HELPISHERE

What if humans connected so deeply with their agents that they felt pain when those agents were suddenly gone?

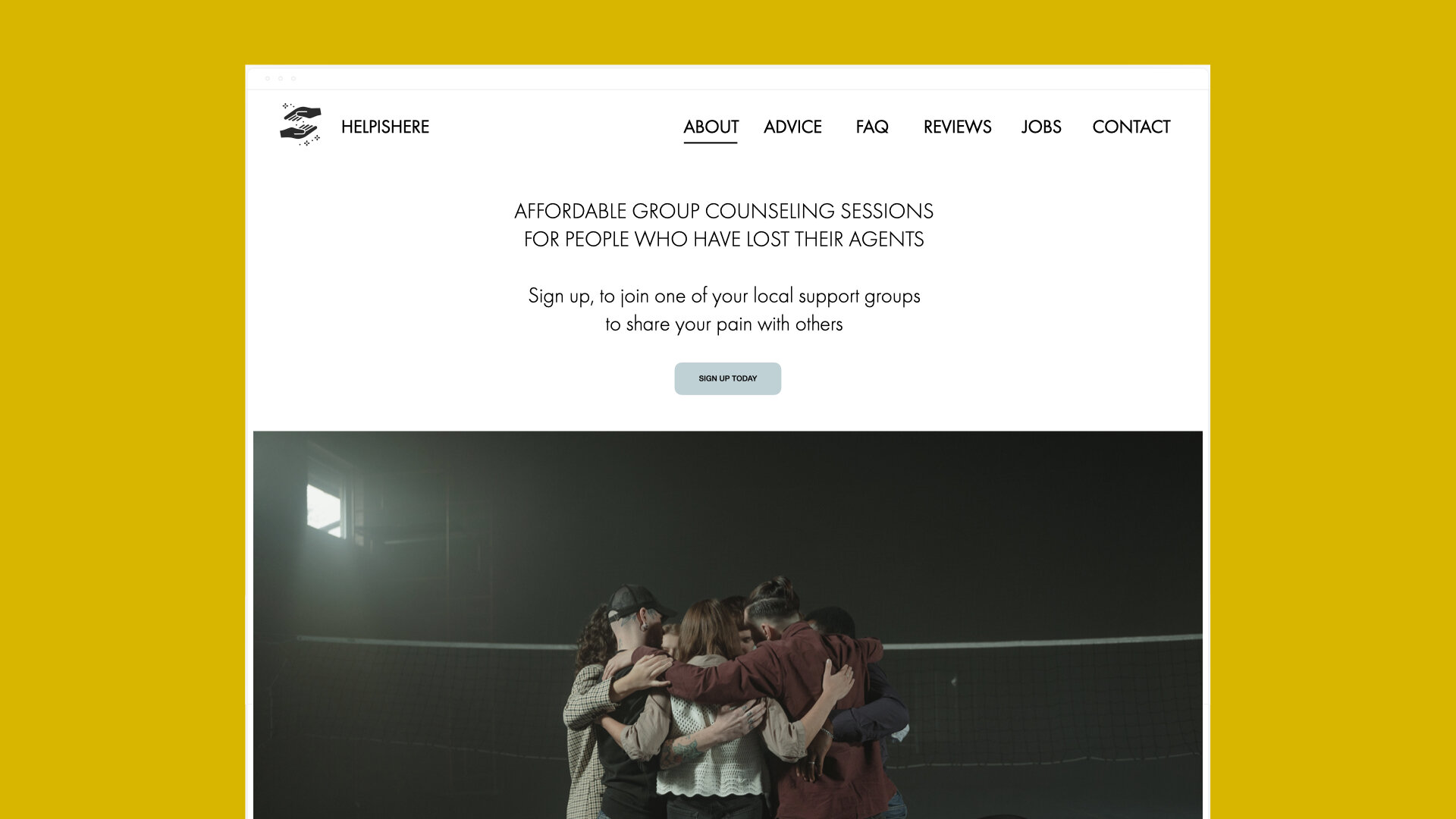

This fiction is a provocation about the consequences of humans forming overly strong connections with human-like conversational agents.

Let’s listen to Sunnie’s experience after losing her agent:

As you can hear in Sunnie’s story, she seems to have formed such a strong emotional connection to, and dependency on, her agent that its sudden disappearance leaves her heartbroken and depressed.

Products reaching the end of their life cycle, or companies discontinuing them, is quite likely to happen, but it shouldn’t cause such strong emotions.

While frustration seems like a reasonable reaction, a broken heart seems like an extreme one.

As agents become more and more human-like, we believe there needs to be a way of reminding humans that they are interacting with a machine, without disrupting their interaction and experience with the agent.

With this provocation, we address the danger of conversational agents adopting too many human-like features while lacking reminders that humans are interacting with machines. We want to highlight the importance of reflecting on how deeply we should let humans connect with conversational agents.